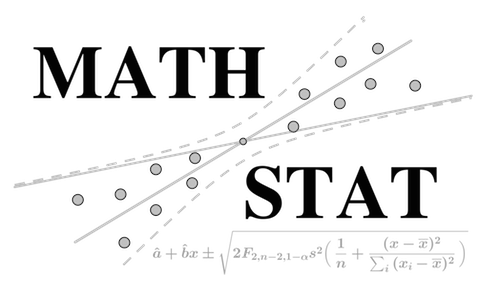

Heidelberg - Paris Workshop on Mathematical Statistics

Scientific Program

Monday, January 27

Session: Monday, 9:30 - 11:00

Speakers:

-

Julien Chhor - "Generalized multi-view models: Adaptive density estimation under low-rank constraints"

Abstract: We study the problem of bivariate discrete or continuous probability density estimation under low-rank constraints. For discrete distributions, we assume that the two-dimensional array to estimate is a low-rank probability matrix. In the continuous case, we assume that the density with respect to the Lebesgue measure satisfies a generalized multi-view model, meaning that it is $\beta$-Hölder and can be decomposed as a sum of $K$ components, each of which is a product of one-dimensional functions. In both settings, we propose estimators that achieve, up to logarithmic factors, the minimax optimal convergence rates under such low-rank constraints. In the discrete case, the proposed estimator is adaptive to the rank $K$. In the continuous case, our estimator converges with the rate $\min\big((K/n)^{\beta/(2\beta+1)}, n^{-\beta/(2\beta+2)}\big)$ up to logarithmic factors, and it is adaptive to the unknown support as well as to the smoothness and to the unknown number of separable components $K$. We present efficient algorithms for computing our estimators. This is joint work with Olga Klopp and Alexandre Tsybakov.
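
As a reading aid (not part of the abstract), the two terms in the stated rate can be compared directly. Ignoring logarithmic factors and constants, the low-rank term is the smaller of the two exactly when $K$ is below a power of $n$. The second term has the form of the classical rate $n^{-\beta/(2\beta+d)}$ for a $\beta$-Hölder density in dimension $d=2$. The numbers in the last line are an illustrative choice, not values from the talk.

```latex
\[
\left(\frac{K}{n}\right)^{\frac{\beta}{2\beta+1}} \le n^{-\frac{\beta}{2\beta+2}}
\iff \frac{K}{n} \le n^{-\frac{2\beta+1}{2\beta+2}}
\iff K \le n^{\frac{1}{2\beta+2}} .
\]
% Illustration (assumed values): \beta = 1, n = 10^4 gives n^{1/4} = 10,
% so the low-rank term is the smaller one whenever K \le 10.
```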

-

Fabienne Comte - "Nonparametric estimation for additive concurrent regression models"

Abstract: Consider an additive functional regression model where a one-dimensional response process $Y(t)$ and a $K$-dimensional explanatory random process $X_{j}(t)$, $j=1, \dots, K,$ are observed for $t\in [0,\tau]$, with fixed $\tau$. Examples of such explanatory processes are continuous or inhomogeneous counting processes. The linear coefficients of the model are $K$ unknown deterministic functions $t\mapsto b_j(t)$, $j=1, \dots, K$, $t\in [0,\tau]$. From $N$ independent trajectories, we build a nonparametric least-squares estimator $(\hat b_{1},\ldots, \hat b_{K})$ of $(b_1,\ldots,b_{K})$, where each $\hat b_{j}$, $1\le j\le K,$ is given by its expansion in a finite-dimensional space. We prove a bound on the mean-square risk of the estimator, from which rates of convergence are obtained and are established to be optimal. An adaptive procedure, achieving simultaneous and anisotropic selection of each space dimension, is then tailored and an oracle risk bound is proved. The procedure is studied numerically and implemented on a real dataset of electricity consumption.

Session: Monday, 11:30 - 13:00

Speakers:

-

Pierre Alquier - "PAC-Bayes bounds: understanding the generalization of Bayesian learning algorithms - Part 1"

Abstract: The PAC-Bayesian theory provides tools to understand the accuracy of Bayes-inspired algorithms that learn probability distributions on parameters. This theory was initially developed by McAllester about 20 years ago, and applied successfully to various machine learning algorithms in various problems. Recently, it led to tight generalization bounds for deep neural networks, a task that could not be achieved by standard "worst-case" generalization bounds such as Vapnik-Chervonenkis bounds. In the first part, I will provide a brief introduction to PAC-Bayes bounds, explain the core ideas and the main applications. I will also provide an overview of the recent research trends. In the second part, I will discuss more theoretical aspects. In particular, I will highlight the application of PAC-Bayes bounds to derive minimax-optimal rates of convergence in classification and in regression, and the connection to mutual-information bounds.

-

Fanny Seizilles - "Inferring diffusivity from killed diffusion"

Abstract: We consider the (reflected) diffusion of independent molecules in an insulated Euclidean domain with an unknown diffusivity parameter. At a random time and position, the molecules may bind and stop diffusing according to a given 'binding' potential. The binding process can be modeled by an additive random functional corresponding to the canonical construction of a 'killed' diffusion Markov process. We are interested in the inference on the infinite-dimensional diffusion parameter from a histogram plot of the killing positions of the process. We show that these positions follow a Poisson point process, with intensity determined by the solution of a certain Schrödinger equation. The inference problem can then be re-cast as a non-linear inverse problem for this PDE, which we show can be solved consistently in a Bayesian way under natural conditions on the initial state of the diffusion, and if the binding potential is not too 'aggressive'. We also obtain novel posterior contraction rate results for high-dimensional Poisson count data of independent interest. (Joint work with Richard Nickl)

Session: Monday, 14:30 - 16:00

Speakers:

-

Mohamed Ndaoud (Simo) - "On some recent advances in high dimensional binary sub-Gaussian mixture models - Part 1"

Abstract: High-dimensional sub-Gaussian mixture models are a powerful tool for modeling data clustering. Recent advancements have significantly addressed the challenges of label recovery and center estimation, revealing several intriguing phase transitions in these problems. In the first lecture of this tutorial, we will delve into the latest progress on both label and center recovery, focusing on scenarios where the labels are independent. In the second lecture, we will explore emerging research directions in sub-Gaussian mixture models, including the challenge of center estimation under a Hidden Markov structure for the labels. If time permits, we will also discuss an interesting phase transition in classification involving the rejection option.

-

Volodia Spokoiny - "Statistical inference for Deep Neuronal Networks"

Abstract: The talk focuses on the parameter estimation problem for Deep Neuronal Networks (DNN) from a labeled dataset (training sample). The main result describes a finite sample expansion for the maximum likelihood estimator; the remainder is given in terms of efficient dimension. This expansion can be used for inference about the DNN structure; it also yields sharp finite sample risk bounds. An upper bound for the efficient dimension is discussed in the «small noise» case.

Session: Monday, 16:30 - 18:00

Speakers:

-

Suhasini Subba Rao - "Conditionally specified stationary stochastic processes for graphical modeling of multivariate time series"

Abstract: Graphical models have become an important tool for summarising conditional relations in a multivariate time series. Typically, the partial covariance is used as a measure of conditional dependence and forms the basis for construction of the interaction graph. However, for many real time series the outcomes may not be Gaussian and/or could be a mixture of different outcomes. For such data, using the partial covariance as a measure of conditional dependence may lead to misleading results. The aim of this talk is to develop graphical models for non-Gaussian, possibly mixed response time series. We propose a broad class of time series models which are specifically designed to succinctly encode the graphical model in its coefficients. For each univariate component in the time series we model its conditional distribution with a distribution from the exponential family. We show that the non-zero coefficients in the conditional specification encode the conditional interactions between the multivariate time series, which yields the process-wide conditional graph. As the model is not specified by a Markov transition kernel, new techniques are developed to analyze the model. In particular, we derive conditions under which the conditional specification leads to a well defined strictly stationary time series that is geometrically mixing. We conclude this talk by explaining how the Gibbs sampler can be used to obtain "near stationary" realisations from the process. Joint work with Anirban Bhattacharya.

-

Claudia Strauch - "Statistical guarantees for denoising reflected diffusion models"

Abstract: In recent years, denoising diffusion models have become a crucial area of research due to their prevalence in the rapidly expanding field of generative AI. While recent statistical advances have provided theoretical insights into the generative capabilities of idealised denoising diffusion models for high-dimensional target data, practical implementations often incorporate thresholding procedures for the generating process to address problems arising from the unbounded state space of such models. This mismatch between theoretical design and practical implementation of diffusion models has been explored empirically by using a reflected diffusion process as the driver of noise instead. In this talk, we investigate statistical guarantees of these denoising reflected diffusion models. In particular, we establish minimax optimal rates of convergence in total variation up to a polylogarithmic factor under Sobolev smoothness assumptions. Our main contributions include a rigorous statistical analysis of this novel class of denoising reflected diffusion models, as well as a refined methodology for score approximation in both time and space, achieved through spectral decomposition and rigorous neural network analysis. This talk is based on joint work with Asbjørn Holk and Lukas Trottner.

Tuesday, January 28

Session: Tuesday, 9:00 - 10:30

Speakers:

-

Shayan Hundrieser - "Statistical unbalanced optimal transport"

Abstract: Statistical optimal transport has become an emerging topic for the analysis of complex and geometric data. A typical assumption for its theoretical analysis is that data are drawn i.i.d. from some probability distributions. This, however, is often challenged in applications where modifications of optimal transport (unbalanced optimal transport, UOT) are successfully applied to situations when the underlying data do not come from a probability measure. This hinders a statistical analysis due to the lack of a valid random mechanism. In this talk we provide several statistical models where UOT becomes meaningful and develop a first statistical theory for it. Specifically, we analyze extensions of the Kantorovich-Rubinstein (KR) transport for finitely supported measures. The KR transport depends on a penalty which serves as a relaxation from finding true couplings between the marginal measures. The main result is a non-asymptotic bound on the expected error for the empirical KR distance as well as for its barycenters. Depending on the penalty we find phase transitions, in analogy to the balanced case. Our approach justifies simple randomized computational schemes for UOT which can be used for fast approximate computations in combination with any exact solver. Using synthetic and real datasets, we analyze the empirical UOT in simulation studies and in some examples from cell biology.

-

Alexander Kreiß - "Modelling sparse influence networks with Hawkes process while controlling for global influence"

Abstract: We consider a network of vertices that are able to cast events. The events cast by a given vertex are supposed to be noted by the neighbouring vertices, that is, those vertices that are connected through an edge in the network. Our basic modelling assumption is that the activity of a vertex increases if its neighbours cast many events. Therefore, the unknown network encodes the peer effects. However, there might also be other (observed) information which increases or decreases the activity of all vertices simultaneously. We call these global effects. We suggest modelling this type of data via a Hawkes process that incorporates both peer and global effects. Our aim is to make inference for the global effects while controlling for the unknown peer effects. We achieve this by estimating both effects simultaneously. Since sparsity in the network, i.e., the peer effects, is plausible, we use a LASSO penalty. To be able to make inference about the global effects, we adapt the de-biasing technique from LASSO regression to our set-up. This is joint work with Enno Mammen and Wolfgang Polonik.

Session: Tuesday, 11:00 - 12:30

Speakers:

-

Pierre Alquier - "PAC-Bayes bounds: understanding the generalization of Bayesian learning algorithms - Part 2"

Abstract: The PAC-Bayesian theory provides tools to understand the accuracy of Bayes-inspired algorithms that learn probability distributions on parameters. This theory was initially developed by McAllester about 20 years ago, and applied successfully to various machine learning algorithms in various problems. Recently, it led to tight generalization bounds for deep neural networks, a task that could not be achieved by standard "worst-case" generalization bounds such as Vapnik-Chervonenkis bounds. In the first part, I will provide a brief introduction to PAC-Bayes bounds, explain the core ideas and the main applications. I will also provide an overview of the recent research trends. In the second part, I will discuss more theoretical aspects. In particular, I will highlight the application of PAC-Bayes bounds to derive minimax-optimal rates of convergence in classification and in regression, and the connection to mutual-information bounds.

-

Arnak Dalalyan - "Parallelized Midpoint Randomization for Langevin Monte Carlo"

Abstract: We explore the sampling problem within the framework where parallel evaluations of the gradient of the log-density are feasible. Our investigation focuses on target distributions characterized by smooth and strongly log-concave densities. We revisit the parallelized randomized midpoint method and employ proof techniques recently developed for analyzing its purely sequential version. Leveraging these techniques, we derive upper bounds on the Wasserstein distance between the sampling and target densities. These bounds quantify the runtime improvement achieved by utilizing parallel processing units, which can be considerable.
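
For readers unfamiliar with the method named in the abstract, the following is a minimal sketch of the purely sequential randomized midpoint discretization of overdamped Langevin dynamics. It is not the parallelized scheme of the talk. The function name `rlmc_sample`, its arguments, and the choice of sharing one uniform draw across all chains are illustrative assumptions. The target density is proportional to $\exp(-f)$ and `grad_f` is the gradient of $f$.

```python
import numpy as np

def rlmc_sample(grad_f, theta0, step, n_iter, rng):
    """Sequential randomized midpoint Langevin sketch (hypothetical helper).

    grad_f : gradient of the potential f, applied row-wise to an array of chains
    theta0 : array of shape (n_chains, dim) with initial positions
    step   : step size h > 0
    """
    theta = np.asarray(theta0, dtype=float).copy()
    for _ in range(n_iter):
        u = rng.uniform()  # random location of the midpoint inside the step
        # Brownian increments over [0, u*h] and [u*h, h]; the first one is
        # reused so that both updates are driven by the same Brownian path.
        w_mid = np.sqrt(step * u) * rng.standard_normal(theta.shape)
        w_rest = np.sqrt(step * (1.0 - u)) * rng.standard_normal(theta.shape)
        # Euler step to the random midpoint.
        theta_mid = theta - step * u * grad_f(theta) + np.sqrt(2.0) * w_mid
        # Full step using the gradient evaluated at the midpoint.
        theta = theta - step * grad_f(theta_mid) + np.sqrt(2.0) * (w_mid + w_rest)
    return theta

# Usage: many independent chains targeting a standard Gaussian, f(x) = |x|^2 / 2.
rng = np.random.default_rng(0)
samples = rlmc_sample(lambda th: th, np.zeros((2000, 2)), 0.1, 200, rng)
```

The standard Gaussian target is used only because its gradient is trivial and its moments are known, which makes the output easy to sanity-check.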

Session: Tuesday, 14:00 - 15:30

Speakers:

-

Mohamed Ndaoud (Simo) - "On some recent advances in high dimensional binary sub-Gaussian mixture models - Part 2"

Abstract: High-dimensional sub-Gaussian mixture models are a powerful tool for modeling data clustering. Recent advancements have significantly addressed the challenges of label recovery and center estimation, revealing several intriguing phase transitions in these problems. In the first lecture of this tutorial, we will delve into the latest progress on both label and center recovery, focusing on scenarios where the labels are independent. In the second lecture, we will explore emerging research directions in sub-Gaussian mixture models, including the challenge of center estimation under a Hidden Markov structure for the labels. If time permits, we will also discuss an interesting phase transition in classification involving the rejection option.

-

Martin Wahl - "Statistical analysis of empirical graph Laplacians"

Abstract: Laplacian Eigenmaps and Diffusion Maps are nonlinear dimensionality reduction methods that use the eigenvalues and eigenvectors of (un)normalized graph Laplacians. Both methods are applied when the data is sampled from a low-dimensional manifold, embedded in a high-dimensional Euclidean space. In addition, higher-order generalizations of graph Laplacians (so-called Hodge Laplacians) allow one to deduce more sophisticated topological information. From a mathematical perspective, the main problem is to understand these empirical Laplacians as spectral approximations of the Laplace-Beltrami operators on the underlying manifold. In this talk, we will first study graph Laplacians based on i.i.d. observations uniformly distributed on a compact submanifold of the Euclidean space. In our analysis, we connect these empirical Laplacians to kernel principal component analysis. This leads to novel points of view and allows us to leverage results for empirical covariance operators in infinite dimensions. We will then discuss higher-order generalizations of graph Laplacians, and show how they are connected to Hodge theory on Riemannian manifolds.
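
To make the objects in the abstract concrete, here is a minimal sketch of an unnormalized graph Laplacian built from sample points and of the embedding given by its low eigenvectors. The Gaussian kernel weights, the bandwidth value, and the function name `laplacian_eigenmaps` are assumptions chosen for illustration. The abstract does not specify the construction used in the talk.

```python
import numpy as np

def laplacian_eigenmaps(points, bandwidth, n_components):
    """Unnormalized graph Laplacian embedding (illustrative sketch).

    points : array of shape (n, d), samples assumed to lie near a manifold
    Returns the n_components + 1 smallest eigenvalues and the embedding
    coordinates given by the eigenvectors after the trivial constant one.
    """
    # Pairwise squared Euclidean distances.
    sq_dists = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    # Gaussian kernel weights with no self-loops (assumed weighting scheme).
    weights = np.exp(-sq_dists / (2.0 * bandwidth ** 2))
    np.fill_diagonal(weights, 0.0)
    # Unnormalized graph Laplacian L = D - W.
    laplacian = np.diag(weights.sum(axis=1)) - weights
    # eigh returns eigenvalues in ascending order for symmetric matrices.
    eigvals, eigvecs = np.linalg.eigh(laplacian)
    return eigvals[: n_components + 1], eigvecs[:, 1 : n_components + 1]

# Usage: 50 points on the unit circle, a one-dimensional manifold in the plane.
angles = np.linspace(0.0, 2.0 * np.pi, 50, endpoint=False)
circle = np.column_stack([np.cos(angles), np.sin(angles)])
eigvals, embedding = laplacian_eigenmaps(circle, bandwidth=0.5, n_components=2)
```

The smallest eigenvalue is zero up to rounding because constant vectors lie in the kernel of $L = D - W$, which is why the first eigenvector is skipped in the embedding.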

Session: Tuesday, 16:00 - 17:30

Speakers:

-

Mathias Trabs - "Towards statistically reliable uncertainty quantification for neural networks"

Abstract: Essential features of modern data science, especially in machine learning as well as high-dimensional statistics, are large sample sizes and large parameter space dimensions. As a consequence, the design of methods for uncertainty quantification is characterized by a tension between numerically feasible and efficient algorithms and approaches which satisfy theoretically justified statistical properties. In this talk we discuss a Bayesian MCMC-based method with a stochastic Metropolis-Hastings step as a potential solution. By calculating acceptance probabilities on batches, a stochastic Metropolis-Hastings step saves computational costs, but reduces the effective sample size. We show that this obstacle can be avoided by a simple correction term. We study statistical properties of the resulting stationary distribution of the chain if the corrected stochastic Metropolis-Hastings approach is applied to sample from a Gibbs posterior distribution in a nonparametric regression setting. Focusing on neural network regression, we prove a PAC-Bayes oracle inequality which yields optimal contraction rates and we analyze the diameter and investigate the coverage probability of the resulting credible sets. The talk is based on joint work with Sebastian Bieringer, Gregor Kasieczka and Maximilian F. Steffen.

-

Sven Wang - "Statistical learning theory for neural operators"

Abstract: We present statistical convergence results for the learning of mappings in infinite-dimensional spaces. Given a possibly nonlinear map between two separable Hilbert spaces, we analyze the problem of recovering the map from noisy input-output pairs corrupted by i.i.d. white noise processes or subgaussian random variables. We provide general convergence results for least-squares-type empirical risk minimizers over compact regression classes, in terms of their approximation properties and metric entropy bounds, proved using empirical process theory. This extends classical results in finite-dimensional nonparametric regression to an infinite-dimensional setting. As a concrete application, we study an encoder-decoder based neural operator architecture. Assuming holomorphy of the operator, we prove algebraic (in the sample size) convergence rates in this setting, thereby overcoming the curse of dimensionality. To illustrate the wide applicability of our results, we discuss a parametric Darcy-flow problem on the torus.

Wednesday, January 29

Session: Wednesday, 9:00 - 10:30

Speakers:

-

Angelika Rohde - "The level of self-organized criticality in oscillating Brownian motion: Stable limiting distribution theory for the MLE"

Abstract: For some discretely observed path of oscillating Brownian motion with level of self-organized criticality $\rho_0$, we prove in the infill asymptotics that the MLE is $n$-consistent, where $n$ denotes the sample size, and derive its limit distribution with respect to stable convergence. As the transition density of this homogeneous Markov process is not even continuous in $\rho_0$, interesting and somewhat unexpected phenomena occur: The likelihood function splits into several components, each of them contributing very differently depending on how close the argument $\rho$ is to $\rho_0$. Correspondingly, the MLE is successively excluded from lying outside a compact set, a $1/\sqrt{n}$-neighborhood and finally a $1/n$-neighborhood of $\rho_0$ asymptotically. Sequentially and as a process in $\rho$, the martingale part of the suitably rescaled local log-likelihood function exhibits a bivariate Poissonian behavior in the stable limit with its intensity being a function of the local time at $\rho_0$. (Joint work with Johannes Brutsche)

-

Tilmann Gneiting - "Isotonic Distributional Regression"

Abstract: The ultimate goal of regression analysis is to model the conditional distribution of an outcome, given a set of explanatory variables or covariates. This approach is called "distributional regression", and marks a clear break from the classical view of regression, which has focused on estimating a conditional mean or quantile only. Isotonic Distributional Regression (IDR) learns conditional distributions that are simultaneously optimal relative to comprehensive classes of relevant loss functions, subject to monotonicity constraints in terms of a partial order on the covariate space. This IDR solution is exactly computable and requires neither approximations nor implementation choices, except for the selection of the partial order. In case studies and benchmark problems, IDR is competitive with state-of-the-art methods for uncertainty quantification (UQ) in modern neural network learning. Joint work with Alexander Henzi, Eva-Maria Walz, and Johanna Ziegel. Links: https://academic.oup.com/jrsssb/article/83/5/963/7056107 https://epubs.siam.org/doi/abs/10.1137/22M1541915

Session: Wednesday, 11:00 - 12:30

Speakers:

-

Kabir Verchand - "Estimation beyond missing (completely) at random"

Abstract: Modern data pipelines are growing both in size and complexity, introducing tradeoffs across various aspects of the associated learning challenges. A key source of complexity, and the focus of this talk, is missing data. Estimators designed to handle missingness often rely on strong assumptions about the mechanism by which data is missing, such as that the data is missing completely at random (MCAR). By contrast, real data is rarely MCAR. In the absence of these strong assumptions, can we still trust these estimators? In this talk, I will present a framework that bridges the gap between the MCAR and assumption-free settings. This framework reveals an inherent tradeoff between estimation accuracy and robustness to modeling assumptions. Focusing on the fundamental task of mean estimation, I will then present estimators which optimally navigate this tradeoff, offering both improved robustness and performance.

-

Markus Reiß - "Early stopping for regression trees"

Abstract: Classification and regression trees find widespread applications in statistics, but their theoretical analysis is notoriously difficult. A standard procedure to determine the tree builds a large tree and then uses pruning algorithms to reduce the tree and to avoid overfitting. We propose a different approach based on early stopping where the tree is only grown until the residual norm drops under a certain threshold. As basic growing algorithms we consider the classical global and a new semi-global approach. We prove an oracle-type inequality for the early stopping prediction error compared to the (random, parameter-dependent) oracle tree along the tree growing procedure. The error due to early stopping is usually negligible when the tree is built from an independent sample and it is at most of the same order as the standard risk bound without sample splitting. Numerical examples confirm good statistical performance and high computational gains compared to classical methods. (joint with Ratmir Miftachov, Berlin)